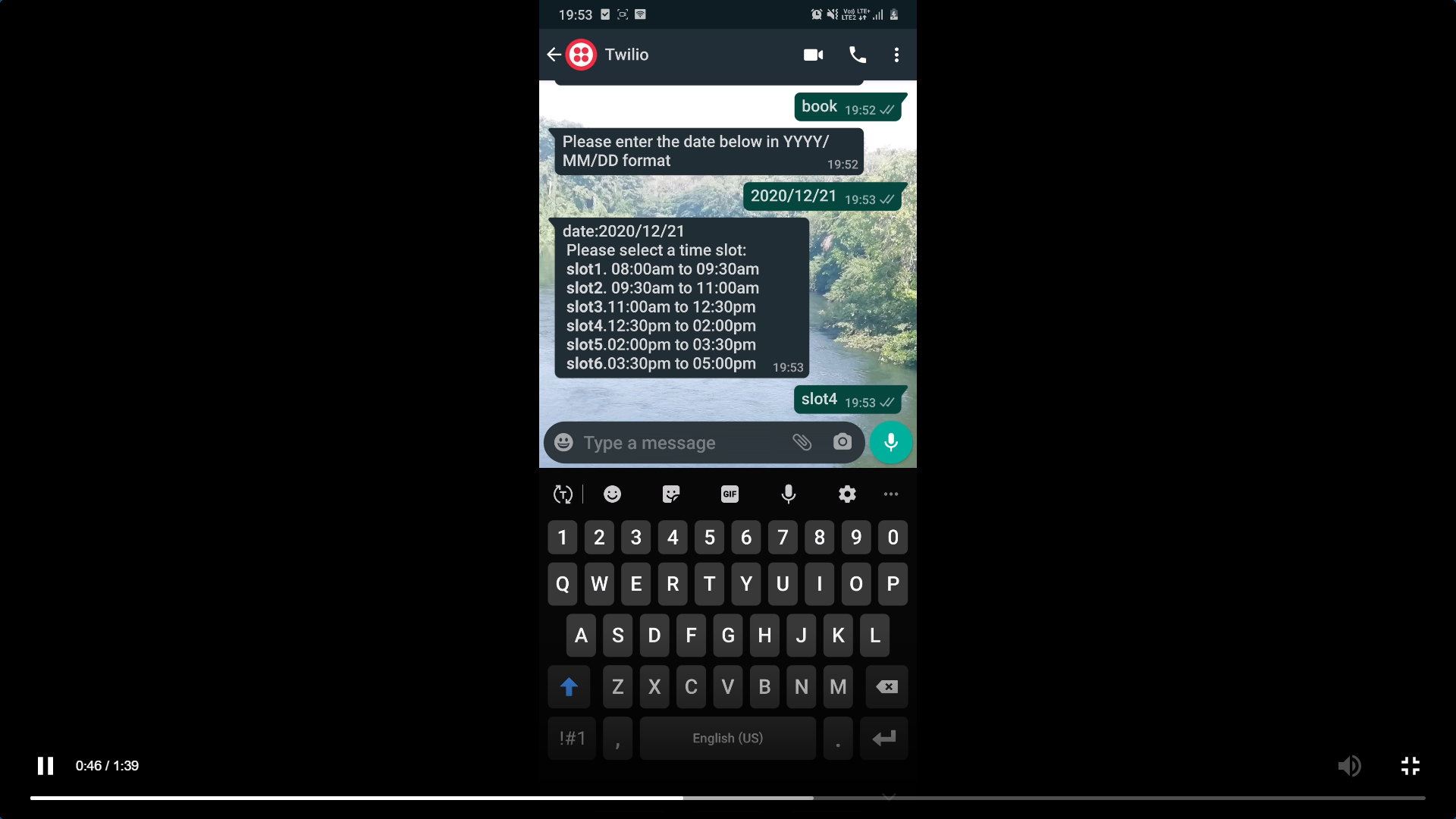

AI and Robotics Center (AIR)

The AIR Lab is an initiative at VIT-AP University and is the first of its kind in the country. It was set up to explore the latest technologies in the field, such as Deep Learning, Machine Learning, and Robotics, and it gives students a platform not only to learn about them but also to work with them to build something unique. The lab provides a technology-rich environment that promotes student-driven learning and offers a new approach to learning in place of the customary one. The lab has grown as more students have come in with unique ideas worth working on. With the support of the faculty, the aim is to be a place where ideas and the latest technologies come together and are made a reality.

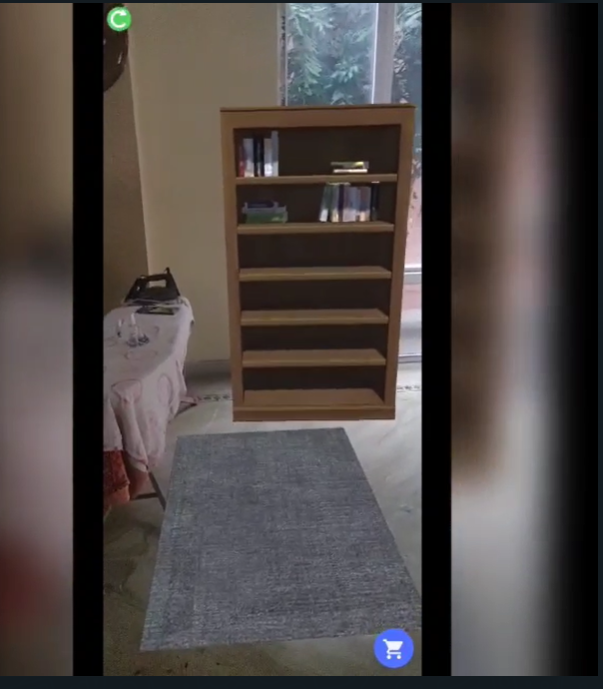

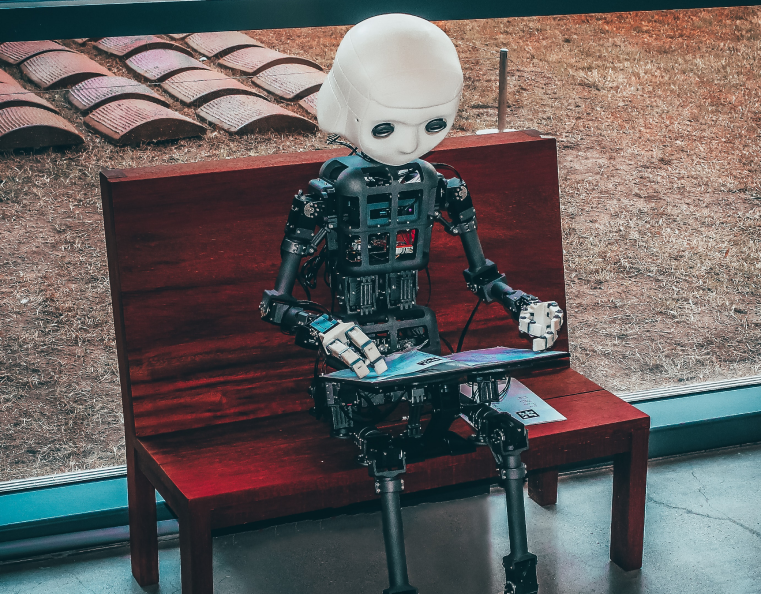

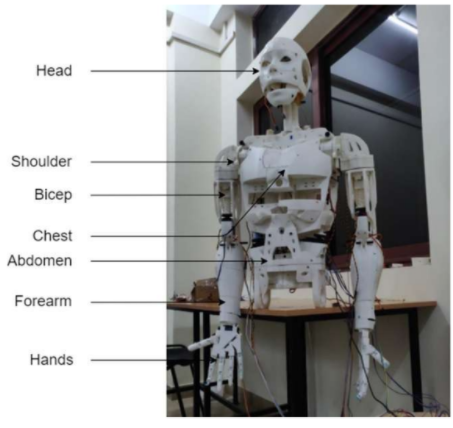

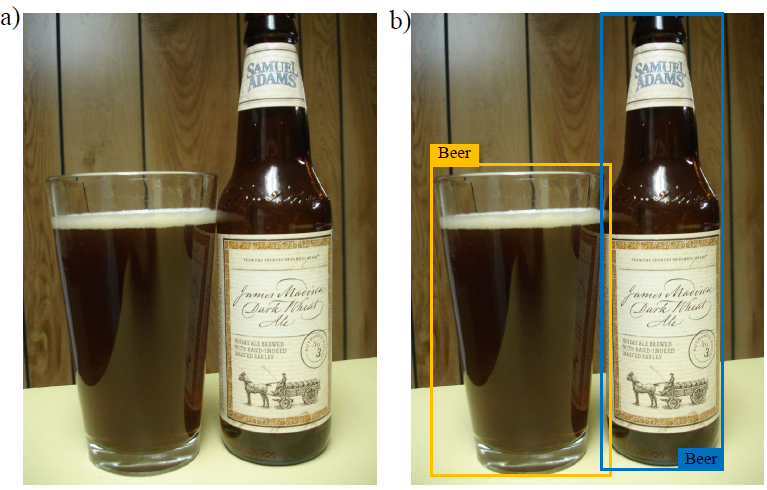

Thrust Areas

Projects

Members
